Did you read the consent form?

How many of you have used OpenAI's DALL-E or GPT? What about Google's Bard, Meta's LLaMA, or Microsoft's Bing? Most of you.

How many of you actually read the privacy policy or terms of service behind these AI products? Not many of you.

If it makes you feel better, you're not alone. ChatGPT reached more than 100 million users within its first 2 months, with 13 million daily users. It's a sticky product, and users have become really reliant on it. When I tried to generate a DALL-E image for this blog, I couldn't access the site because it was overloaded with users. Same with ChatGPT.

I know people who use ChatGPT to write investor updates, get medical advice, draft books, generate startup ideas, and bootstrap apps for their side gigs. Similarly, people use DALL-E to edit images, design logos, draft website user interfaces, and more.

Quick reminder before we move on: ChatGPT is a Large Language Model (LLM) fine-tuned using Reinforcement Learning from Human Feedback (RLHF).

In practice, this means that more users produce more data, and more data helps the AI learn faster. Every time you use ChatGPT, you're freely handing over data that can be used to make the AI more powerful.

Data is the ugly best friend

I started working in AI in 2015, when I joined a tiny startup to build out its ML Data and Infrastructure team. This was well before data in AI was a thing. For the 7+ years I spent working on ML data, data felt ancillary to the model.

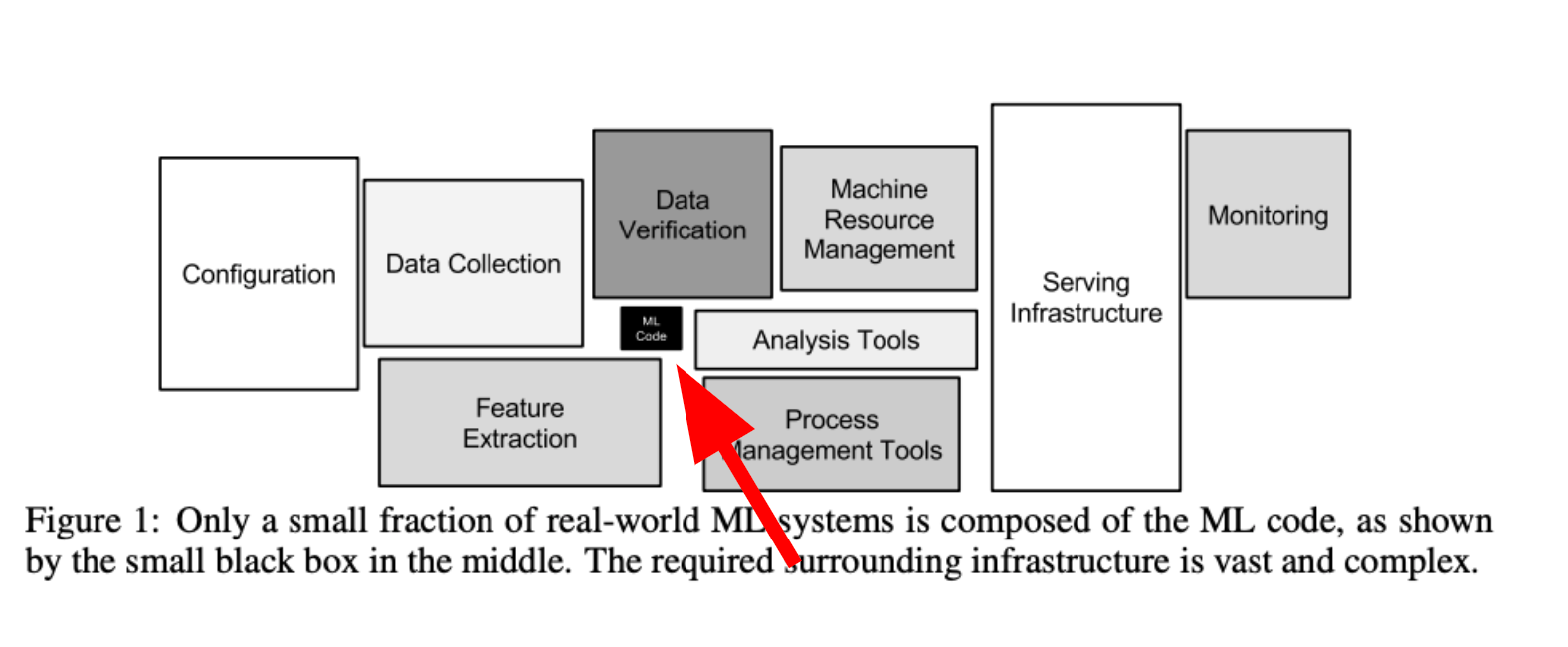

A lot of people don't know this, but the actual ML code is a small portion of the overall ecosystem. The chart above is my favorite visual of the ML ecosystem. The tiny black box is the ML code; the rest is largely data and infrastructure.

As you can see, data is a huge component of the AI ecosystem. Your personal information is a huge component of the AI ecosystem. Back to my original question: did you read the consent form?

What data are you giving out

Let's dive into the legal docs on OpenAI's site to figure out what data you're giving out. Disclaimer: I am not a lawyer.

The privacy policy, data usage policy, and terms of use docs are an obvious place to start. It shouldn't come as a surprise that pretty much nothing is private. You're freely & happily handing out a bunch of your personal information. Some obvious types of data you're giving out:

- Account Information. Okay, this is basic. Your name, contact information, account credentials, payment card details, etc.

- User Content. Also fairly obvious. This is the information included in your inputs, file uploads, or feedback.

- Communication Information. Your contact information, the messages you send, etc.

- Social Media Information. Again, OpenAI collects things like your contact details on social media.

Moving on to the less obvious data you're giving out:

- Log Data. Your IP address, geolocation, browser type and settings, and the date and time of use.

- Usage Data. The types of content you view or engage with, the features you use, and the actions you take, plus your time zone, country, etc.

- Device Information. The type of computer or mobile device, connection, operating system, browser, etc.

- Cookies. Standard.

Taken together, this data means that every time you use GPT, you're building an increasingly thorough profile of who you are, what you're like, what you do, and where you are.

Stepping back, I don't love that I have logged in from every device I own. I don't love that I logged in from my home address, my work address, my parents' address, etc. I also don't love certain personal prompts I have used.

What is your data used for

So, it's obvious that we've all handed OpenAI a ton of data. What is our data used for at OpenAI?

- Research (feels like a broad statement).

- Maintain, improve services (so, improve the AI).

- Communicate with users (ok, we love customer support).

- Develop new services (wen GPT5?).

- Prevent fraud (unclear but sounds fine).

- Comply with legal obligations.

The tl;dr here is that you're making the AI stronger, more accurate, and more efficient. You're also giving OpenAI a pretty robust picture of who you are as a person.

OpenAI can share aggregated information with 3rd parties, publish it, or make it "generally available". The good news is that OpenAI says it strives to de-identify personal information, basically rolling up or stripping the data so it can't be tied back to you.
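The policy doesn't say how OpenAI aggregates or de-identifies data, so here is a toy Python sketch of what "rolling up" generally means. It is not OpenAI's actual process; the records, field names, and the `min_group_size` threshold are all hypothetical. The idea: drop the user identifiers, keep only counts per group, and suppress tiny groups, because a count of 1 can still point back to a single person.

```python
from collections import Counter

# Hypothetical per-user records; names and fields are made up for illustration.
records = [
    {"user": "alice", "country": "US", "feature": "chat"},
    {"user": "bob", "country": "US", "feature": "image"},
    {"user": "carol", "country": "US", "feature": "chat"},
    {"user": "dave", "country": "IS", "feature": "chat"},
]


def roll_up(records, key, min_group_size=2):
    """Count records per value of `key`, never reading the "user" field.

    Groups with fewer than `min_group_size` records are dropped, since a
    group of one effectively identifies that one person.
    """
    counts = Counter(r[key] for r in records)
    return {value: n for value, n in counts.items() if n >= min_group_size}


print(roll_up(records, "country"))  # {'US': 3}
```

Even this is no guarantee: combining several rolled-up tables, or joining them with outside data, can sometimes re-identify people.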

Double click on 3rd parties

I mentioned 3rd parties. It's worth emphasizing that OpenAI can share user data with 3rd parties without notifying the user (unless required by law in certain jurisdictions). Types of 3rd parties:

- Vendors and Service Providers. Feels broad because it is. Cloud services, website services, vendors, web analytic services etc.

- Affiliates. Affiliate means an entity that OpenAI controls or that is controlled by OpenAI.

- Business Transfers. Less intuitive but this includes things like bankruptcy cases, reorgs, transition of service to another provider.

- Legal Requirements. Also obvious, but not intuitive. If the law requires the data, the authorities get the data.

The 3rd party clause is really vague and broad. The odds that you know where your data is going are pretty slim. Let me know if you disagree.

Data controls in place

The good news is that there are some data controls in place.

OpenAI does not "knowingly" collect personal information from children under the age of 13. The problem with the word "knowingly" is that it is hard to enforce, so it is largely on users to gatekeep. Users can email OpenAI's legal team (legal@openai.com) if they think a child under 13 has been using the service. This is helpful, but how realistic and scalable is it?

You own the input data and the content generated. You can sell or publish the content you generate, though OpenAI can still use it. This sounds great for you, but it also puts pretty much all of the liability on you to avoid violating any ethical or legal policies, while OpenAI still gets to use your content.

OpenAI doesn't want you to mislead people & wants you to disclose that you are using their tools: "We encourage you to proactively disclose AI involvement in your work." This is great but, again, very hard to implement. How many people have you seen actually add a disclaimer? What are the repercussions? Who is actually monitoring this?

OpenAI wants you to respect the rights of others. So, please don't upload images or info about other people without their consent. Again, how is this actually enforced and monitored?

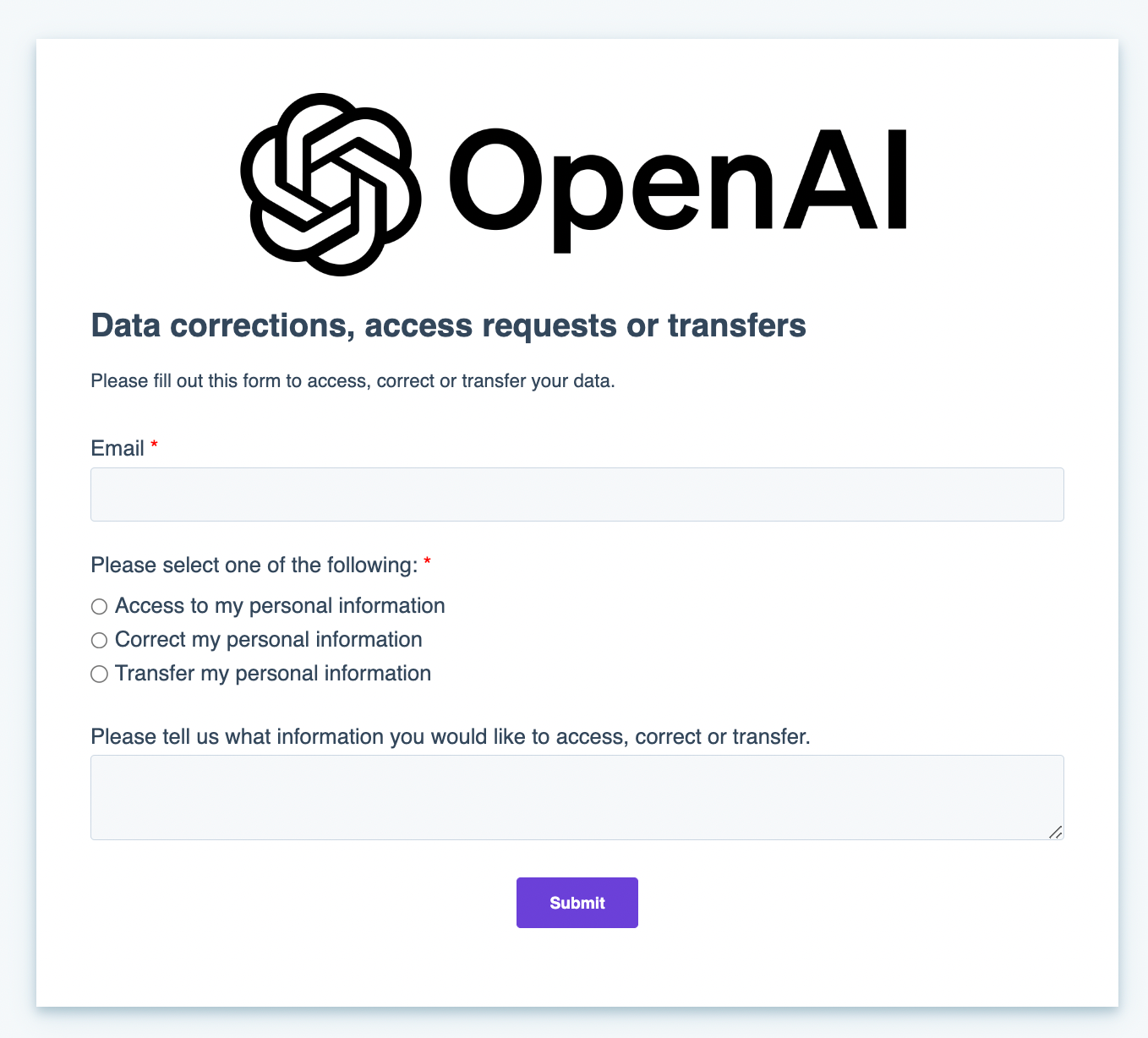

OpenAI has put a lot of measures in place to protect users, largely because of GDPR. GDPR is the EU's data protection law governing personal data, including PII (personally identifiable information). It's widely considered the toughest data privacy law in the world today, and breaches can carry huge penalties: fines of up to €20 million or 4% of a company's global annual turnover, whichever is higher. Depending on your location, you can access or delete your PII. There's a form for this, which doesn't feel that advanced, but it is what it is.

How long does OpenAI have my data

OpenAI keeps your personal information for as long as it needs "to provide service to you". The duration depends on data sensitivity and the law. Again, really vague. The kicker is that if OpenAI anonymizes your data, it can keep it indefinitely "without further notice to you."

Where does this leave us

Pretty much everyone has used DALL-E or GPT. Pretty much no one pays attention to legal policies. We've all handed over a ton of personal data. We should be hyper aware of what data we are giving out and where that data is going.

AI is getting stronger by the click. Building responsible AI starts with the input, not the algorithm. The onus to educate is on both the company and the user.

Personally, I am definitely going to continue using GPT & DALL-E. That said, I am also going to be a lot more wary of what type of data I share with these tools as well as where I use these tools.

If you have any questions, you can contact OpenAI support. Just remember that OpenAI will collect that data & use the content pretty much in perpetuity.